AI browsers are rapidly becoming a major risk to cybersecurity

Emerging threats and security considerations in the era of advanced browser technologies

Takeaways

- AI browsers introduce unique cybersecurity risks, including susceptibility to prompt injection attacks that can extend beyond the browser itself.

- Malicious prompts could lead to data exfiltration and credential theft, potentially compromising entire workflows.

- Many end users remain unaware of the potential dangers and download AI browsers without considering security implications.

- Organizations need to educate users and consider implementing stricter controls to prevent unauthorized software downloads.

- Cybersecurity professionals should proactively collaborate with AI advocates to establish best practices for responsible AI adoption.

- Balancing innovation with security is crucial, and early engagement may help set positive examples for safe AI usage.

As a new type of browser infused with artificial intelligence (AI) capabilities starts to become more widely available, significant security concerns are emerging.

Like most AI tools, this new type of browser is susceptible to prompt injection attacks. The larger issue is that these AI browsers are being connected to a wide range of applications, which makes it possible to extend the reach of a prompt injection attack well beyond the browser.

For example, a malicious prompt injected into content accessed by an AI browser might direct it to exfiltrate data from an application and then forward that data to an external site using a messaging service. The root cause of the issue is that, unlike a human who might, hopefully, recognize the suspicious URLs, spelling errors and unusual layouts that are indicative of a malicious website, AI browsers don’t make those distinctions. More troubling still, the credentials of an end user could be stolen, enabling malicious actors to use that user’s AI browser to commandeer an entire workflow.
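One way to picture a control against the exfiltration scenario above is a policy gate that sits between the AI browser and any outbound action. The following is a minimal sketch under stated assumptions, not a description of how any particular AI browser works: the `ProposedAction` structure, the `is_allowed` function, the `derived_from_page_content` flag and the hosts in `ALLOWED_HOSTS` are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit

# Hypothetical allowlist of destinations the organization has approved.
# A real deployment would load this from managed policy, not hard-code it.
ALLOWED_HOSTS = frozenset({"intranet.example.com", "mail.example.com"})


@dataclass(frozen=True)
class ProposedAction:
    """An outbound action the AI browser wants to take (hypothetical structure)."""
    kind: str                         # e.g. "send_message" or "http_post"
    destination: str                  # URL the data would be sent to
    derived_from_page_content: bool   # True if the instruction came from web page text


def is_allowed(action: ProposedAction) -> bool:
    """Return True only if the action may proceed without human review."""
    host = urlsplit(action.destination).hostname
    if host is None:
        # Destination could not be parsed as a URL with a host: refuse.
        return False
    if host not in ALLOWED_HOSTS:
        # Data would leave for a destination that has not been approved.
        return False
    if action.derived_from_page_content:
        # Instructions found in page content are treated as untrusted,
        # even when the destination itself is approved.
        return False
    return True


if __name__ == "__main__":
    injected = ProposedAction(
        kind="http_post",
        destination="https://evil.example.net/collect",
        derived_from_page_content=True,
    )
    print(is_allowed(injected))
```

The design choice in this sketch is to deny by default: an action proceeds only when the destination is approved and the instruction did not originate from page content, which is the input a prompt injection attack controls. Anything else would be handed to a human for confirmation.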

Unfortunately, while many end users are enthusiastically downloading these AI browsers, they are for the most part blissfully ignorant of, or in some cases willfully oblivious to, the cybersecurity implications.

Proactive steps for safeguarding AI adoption

Of course, a large number of organizations have policies and controls in place that prevent end users from downloading software that has not been approved, but the majority do not. Cybersecurity and IT professionals who work for organizations without such controls should make a concerted effort to, at the very least, make sure every end user clearly understands the potential risks. Beyond that, there might be no time like the present to implement more stringent controls.

In fact, cybersecurity professionals would be well advised to remind users that proponents of an AI philosophy that advocates for going fast and breaking things are not going to be around to help clean up the mess they helped make. Their sole goal is to spur as much adoption of their tools and platforms as quickly as possible, regardless of the level of risk that might create for an organization. The challenge is that cybersecurity professionals are once again in the uncomfortable position of urging caution in the face of a massive wave of exuberance.

Sadly, if history is any guide, there will need to be a very large number of cybersecurity incidents before end users start to fully appreciate the peril. Cybercriminals are themselves still coming up to speed on prompt injection tactics and techniques, but as most cybersecurity professionals already well know: If it can be imagined, someone is already trying it.

Balancing innovation with security

In the meantime, rather than simply waiting for the inevitable issues to arise, cybersecurity teams should be proactively engaging AI advocates within their organization. The more responsible those advocates are, the better the chances that the rest of the organization will one day adopt a set of best practices for safely using AI. Those leading-edge users of AI, in fact, might set the example for all that follow.

Of course, there are always going to be rogue end users who adopt AI tools and applications without much of a second thought, alongside any number of shadow IT technologies they also regularly employ. The only difference in the age of AI is that the level of risk to the organization is now several orders of magnitude greater.

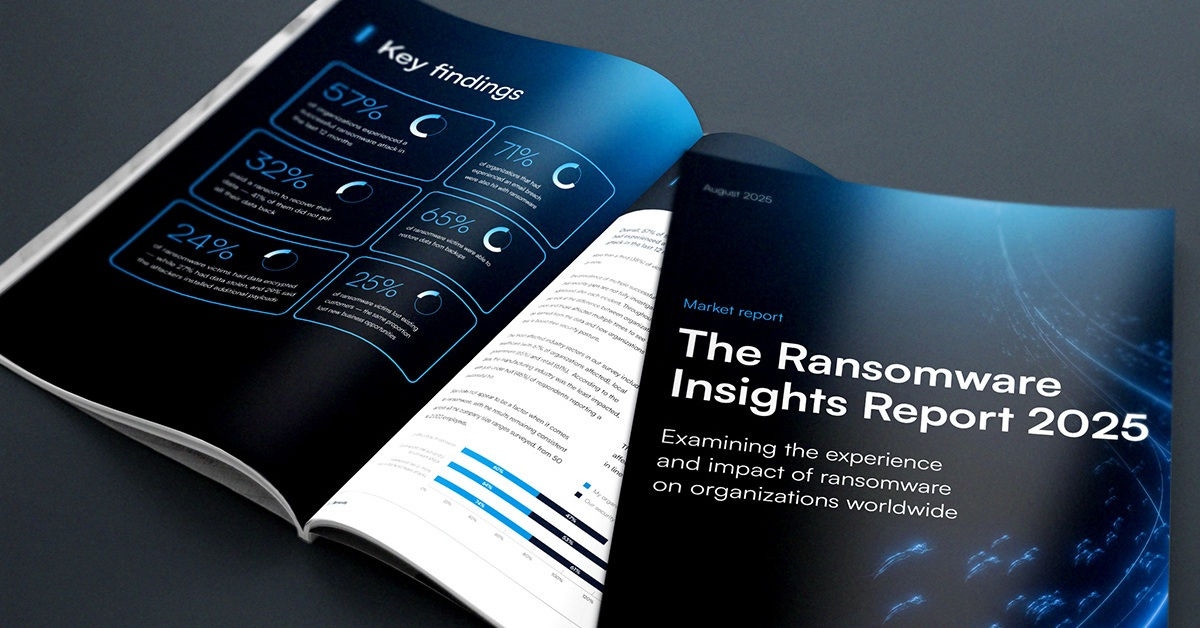
